Artificial intelligence (AI) has become an integral part of our lives, transforming industries and shaping the future of technology. With its increasing prominence, governments worldwide are implementing regulations to ensure the responsible and ethical use of AI. In this blog post, we will dive into the European Union’s (EU) regulations for AI developers, exploring the obligations and requirements imposed on different categories of AI systems.

The EU framework places Generative AI for Package Design in the Limited Risk category, which makes it “subject to additional transparency obligations. Developers of such systems must ensure transparency in their operations, providing users with clear information regarding the generation or manipulation of content.”

In addition, the EU sets out specific obligations for generative AI model providers:

Generative AI providers have additional obligations that build on the requirements for foundation model developers. They must disclose when content (text, video, images, etc.) has been artificially generated or manipulated, in line with the transparency obligations set out in Article 52. They must also implement safeguards that prevent the generation of content that violates EU law. Finally, they must publish a summary of the copyright-protected training data they used.

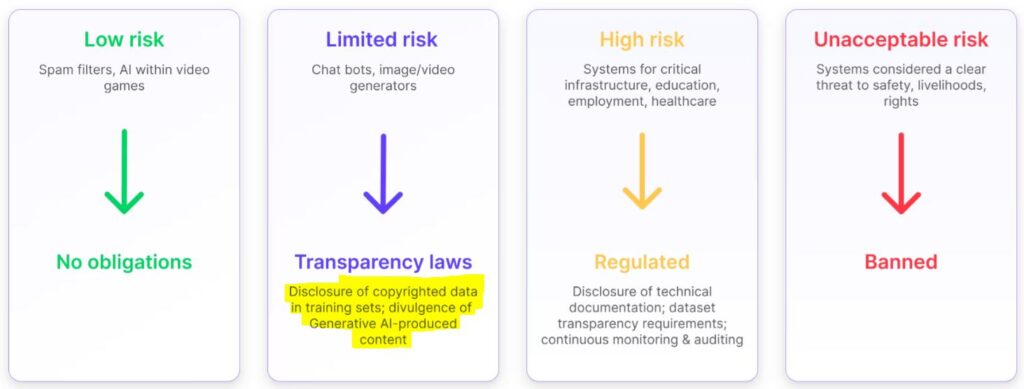

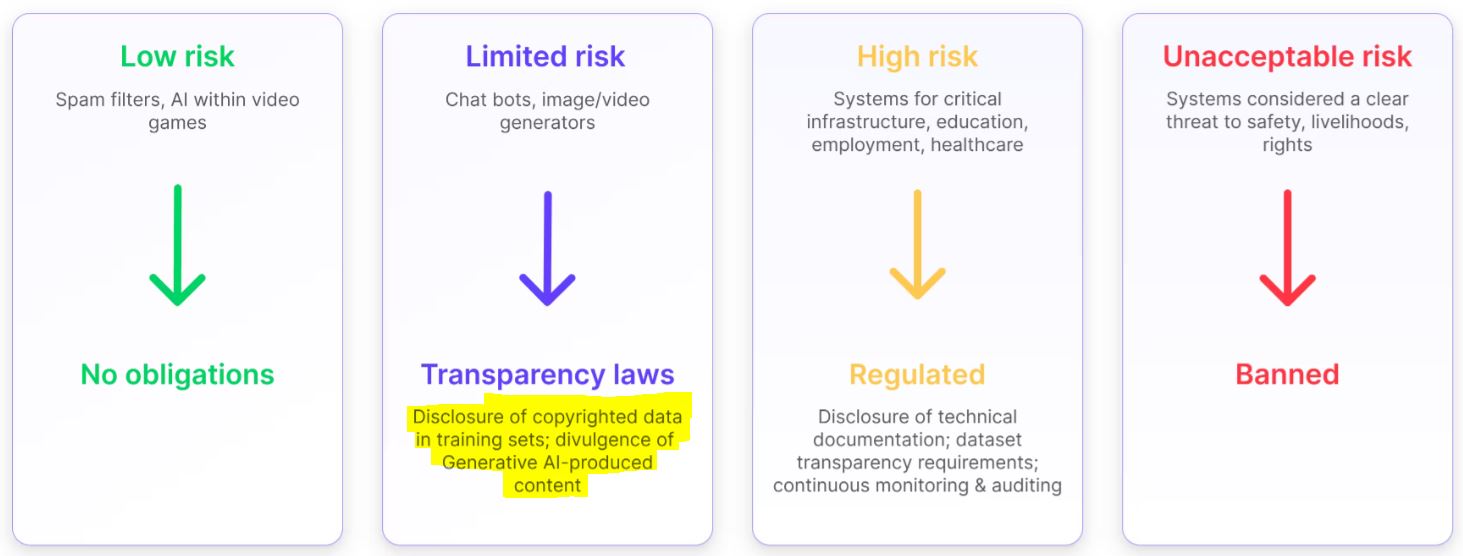

The EU’s Risk-Based Approach to AI

The EU has adopted a risk-based approach to regulating AI systems. This approach sorts AI systems into risk levels, and each level determines the extent of the legal requirements and regulatory intervention that apply. Let’s take a closer look at these risk categories:

Minimal/No-Risk AI Systems:

AI systems that fall into the minimal or no-risk category, such as spam filters and AI within video games, are permitted without any restrictions. Developers of these systems can operate freely within the EU market.

Limited Risk AI Systems:

Limited risk AI systems, including image/text generators, are subject to additional transparency obligations. Developers of such systems must ensure transparency in their operations, providing users with clear information regarding the generation or manipulation of content.

High-Risk AI Systems:

High-risk AI systems, such as those used in recruitment, medical devices, and recommender systems for social media platforms, require compliance with specific AI requirements and conformity assessments. The obligations for high-risk AI systems are more extensive and rigorous. We will delve deeper into these requirements shortly.

Unacceptable Risk Systems:

AI systems falling into the unacceptable risk category are prohibited. These systems pose significant risks to individuals’ rights, safety, and well-being, and their development and deployment are not permitted within the EU.
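To make the tiered structure concrete, here is a minimal Python sketch of the four categories as a lookup table. It is illustrative only: the `RiskTier` enum, the `TIER_SUMMARY` table, and the `obligations_for` / `is_permitted` helpers are hypothetical names, and the obligations are paraphrased from this post rather than taken from the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described above (labels are illustrative)."""
    MINIMAL = "minimal/no risk"
    LIMITED = "limited risk"
    HIGH = "high risk"
    UNACCEPTABLE = "unacceptable risk"


# Hypothetical summary table: example systems and obligations per tier,
# paraphrased from this post, not from the regulation itself.
TIER_SUMMARY = {
    RiskTier.MINIMAL: {
        "examples": ["spam filter", "AI in video games"],
        "obligations": [],  # permitted without restrictions
    },
    RiskTier.LIMITED: {
        "examples": ["image generator", "text generator"],
        "obligations": ["inform users that content is AI-generated or manipulated"],
    },
    RiskTier.HIGH: {
        "examples": ["recruitment", "medical device", "social media recommender"],
        "obligations": [
            "ex-ante conformity assessment",
            "risk management",
            "testing",
            "data governance (Article 10)",
        ],
    },
    RiskTier.UNACCEPTABLE: {
        "examples": [],
        "obligations": None,  # prohibited: there is no compliance path
    },
}


def is_permitted(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


def obligations_for(tier: RiskTier) -> list:
    """Return the obligations for a permitted tier; raise for a prohibited one."""
    if not is_permitted(tier):
        raise ValueError(f"{tier.value} systems are prohibited in the EU")
    return TIER_SUMMARY[tier]["obligations"]
```

For example, `obligations_for(RiskTier.LIMITED)` returns the single transparency obligation, while `obligations_for(RiskTier.UNACCEPTABLE)` raises, reflecting that prohibited systems cannot be made compliant by adding requirements.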

Obligations for Different AI System Developers:

General-Purpose AI (GPAI) Developers:

The obligations for GPAI developers apply primarily to high-risk systems. Because most AI systems are not high-risk, the EU instead encourages developers of non-high-risk systems to apply the mandatory requirements voluntarily through “codes of conduct.”

Developers of high-risk GPAI systems must comply with a set of rules, including an ex-ante conformity assessment, risk management practices, testing protocols, and requirements for high-quality training data. Article 10, in particular, requires training, validation, and testing datasets to be relevant, representative, complete, and free of errors.

GPAI providers must register their systems in an EU-wide database and appoint an authorized representative for compliance and post-market monitoring.

Foundation Model Providers:

The EU imposes stricter requirements on foundation model developers than on GPAI developers. For foundation models, there is no minimal/no-risk category. Developers must ensure risk management, data governance, and model robustness through independent expert vetting and extensive documentation, analysis, and testing.

Similar to high-risk systems, foundation model providers must implement quality management systems, register their models in the EU database, and disclose computing power requirements and total training time.

Conclusion:

The EU’s regulations for AI developers aim to strike a balance between innovation and the protection of individuals’ rights and well-being. Through its risk-based approach, the EU ties each developer’s obligations to the risk level of their system. Generative AI model providers face additional, specific obligations that they will need to implement.
